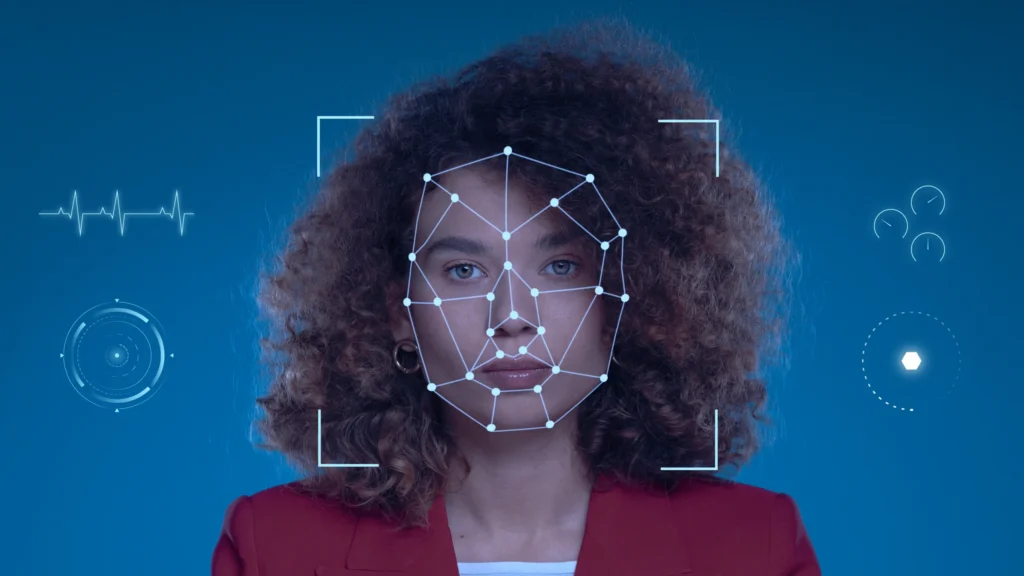

A new analysis has found that women and girls make up nearly 99 percent of the victims of nonconsensual AI-generated deepfakes, a trend experts say demands immediate congressional action.

The San Francisco Chronicle reported that by 2023, over 95,000 explicit deepfake videos had been posted online, with the overwhelming majority created without consent.

International data backs this alarming figure. Australia’s eSafety Commissioner warned of a 550 percent surge in deepfake pornography since 2019, with nearly all cases targeting women and minors.

A recent Guardian report detailed a legal case in which a man accused of posting deepfake images of Australian women could face penalties of up to $450,000. The case marks one of the country’s first high-profile prosecutions.

Lawmakers in the United States are now under growing pressure to respond.

While the newly introduced Take It Down Act provides a pathway for victims to request removal of explicit deepfake content, rights groups argue it falls short without stricter penalties and prevention measures.

Advocates told the Chronicle that minors are increasingly targeted and that some cases involve students as young as 15, underscoring the need for stronger protections.

Efforts to legislate are also spreading globally. In New South Wales, opposition leaders have proposed a bill to criminalize the creation and distribution of synthetic sexual content after public outrage over cases involving teenagers.

As News.com.au reported, lawmakers described the technology as “vile” and warned that its rapid spread was leaving victims traumatized with little legal recourse.

Digital rights groups argue that while deepfake tools can be used for creative innovation, their weaponization against women has become a defining crisis in the AI age.

Analysts warn that without urgent regulatory action, victims will continue to shoulder the burden of fighting a flood of exploitative content spreading across social platforms.